Project Portfolio

This page contains a sampling of some of my favorite projects that I've been fortunate enough to be a part of over the years. It also features a few custom software tools and instruments that I've made as time allowed. Thanks for checking it out!

- Cheers, Daniel

Moses - Beyond The Waves

Robot Recon

Summary: Robot Recon is a third-person, playable game level created with Unreal Engine 4 and Audiokinetic's Wwise middleware to test various spatial audio rendering solutions and SDKs. Navigating the character through the environment's different spaces and interacting with both static and moving objects allows for rapid, effective evaluation of how well the target audio solution handles key elements such as sound object localization, occlusion and obstruction, room portals, reflections, and acoustic space simulation. Solutions tested include Unreal's audio engine, Wwise Spatial Audio, Dolby Atmos for gaming, Auro-3D, Windows Sonic, Oculus Audio, and Google Resonance. All of the visual assets in the level came from the UE Marketplace. The process also gave valuable insight into other aspects of Unreal Engine, including how visual elements such as meshes, materials, skeletons, animations, blend spaces, locomotion, nav meshes, behavior trees, and sequences are created, implemented, and manipulated within the game engine. Role: Technical Sound Design / Game (Level) Design

Mass Effect: New Earth - A 4D Holographic Journey

Summary: Mass Effect: New Earth is a theatrical motion simulator amusement park ride at California's Great America in Santa Clara, California. It is an adaptation of the Mass Effect video game series and features recurring characters from the franchise. During the 4½-minute ride, guests sit in the 80-seat Action Theater in front of a custom 60-foot 3D LED screen with 4K resolution. The motion seats simulate wind and water and are fitted with leg pokers and neck ticklers. The ride features an 80-channel immersive surround sound system with custom beamforming speaker arrays built into the railing set pieces for each row. Guests also wear passive 3D glasses. Role: Principal Sound Design & Mix / Audio System Spec & Design

"The People Upstairs - Joe Garrison and Night People"

Cry Out: The Lonely Whale Experience

Custom Software Tools & Instruments

Gesture Control 3D Sound Panner

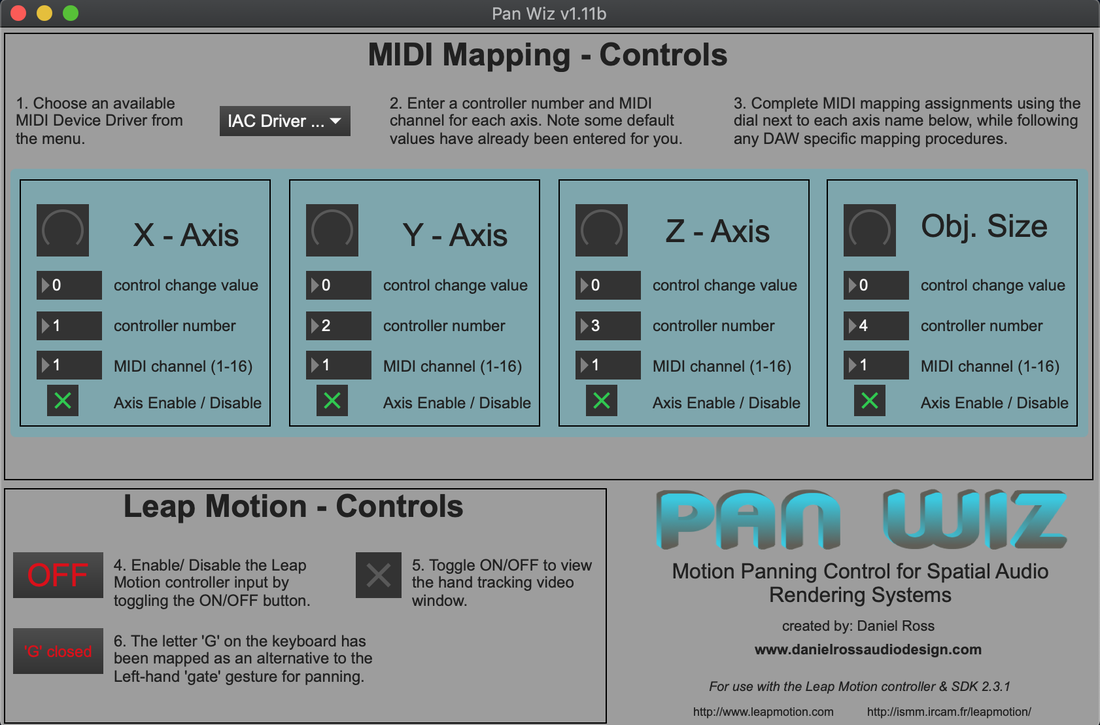

Pan Wiz

Summary: Pan Wiz was developed for Jon Anderson (lead vocalist of Yes) and Emmy-nominated composer Sean Mckee to use on their upcoming album project, which uses Dolby Atmos spatial audio technology. It's a simplified version of the Gesture Control 3D Sound Panner (above) that takes hand-tracking data from the Leap Motion controller and converts it to MIDI data that can then be easily mapped to the Atmos Music Panner plug-in inside their DAW. Role: Audio Software Developer
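
For readers curious about the conversion step, here is a minimal sketch of how a hand position could be turned into MIDI Control Change messages. It is not the Pan Wiz source: the `HandPosition` type, the axis ranges, and the CC numbers are all hypothetical, and no Leap Motion SDK calls are shown. It only illustrates clamping a coordinate to a working range and scaling it to the 0-127 range that a plug-in parameter can be MIDI-mapped to.

```python
from typing import List, NamedTuple


class HandPosition(NamedTuple):
    """Palm position in millimetres (hypothetical type, not a Leap Motion SDK class)."""
    x: float
    y: float
    z: float


# Assumed working volume (min, max) per axis, in millimetres.
AXIS_RANGES = {"x": (-200.0, 200.0), "y": (100.0, 500.0), "z": (-200.0, 200.0)}
# Assumed CC numbers; any free controllers could be mapped in the DAW instead.
AXIS_CC = {"x": 20, "y": 21, "z": 22}


def scale_to_midi(value: float, lo: float, hi: float) -> int:
    """Clamp value to [lo, hi] and scale it linearly to the MIDI range 0..127."""
    clamped = max(lo, min(hi, value))
    return round((clamped - lo) / (hi - lo) * 127)


def hand_to_cc_messages(pos: HandPosition, channel: int = 0) -> List[bytes]:
    """Return one raw 3-byte MIDI Control Change message per axis."""
    status = 0xB0 | (channel & 0x0F)  # 0xB0 = Control Change on channel 0
    messages = []
    for axis in ("x", "y", "z"):
        lo, hi = AXIS_RANGES[axis]
        cc_value = scale_to_midi(getattr(pos, axis), lo, hi)
        messages.append(bytes([status, AXIS_CC[axis], cc_value]))
    return messages
```

In a setup like this, the resulting bytes would be written to a virtual MIDI port, and the panner's X, Y, and Z parameters would be mapped to the three controllers inside the DAW.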

Groove Box: Drum Designer & Rhythm Sequencer

H.S.F (Hear.See.Feel) - An Exercise in Sensorial & Technical Connections

This multi-program patch was designed using Processing, Ableton Live, TouchOSC, and OSCulator. I wanted an iPad to act as a drum machine that also triggers various strings and classes in Processing, which display words describing the drum samples being played. Processing takes the OSC data from TouchOSC (iPad) and passes it through to OSCulator, which translates it into MIDI that is mapped to a drum rack in Ableton Live. The idea was not only to explore the visual and lexical relationship to the audio samples but also to explore routing possibilities between programs.
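
The OSC-to-MIDI translation in that chain can be sketched roughly as follows. This is an illustration only, not the original patch and not how OSCulator works internally: the OSC addresses, the pad-to-note table, and the descriptive words are hypothetical. The sketch decodes a single-float OSC message (as a drum-pad button might send) and produces both a MIDI note message for the drum rack and the word to display.

```python
import struct
from typing import Tuple


def _read_padded_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read a null-terminated OSC string that is padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and padding so the next field is 4-byte aligned.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_float_message(packet: bytes) -> Tuple[str, float]:
    """Decode an OSC message carrying exactly one float32 argument."""
    address, offset = _read_padded_string(packet, 0)
    type_tags, offset = _read_padded_string(packet, offset)
    if type_tags != ",f":
        raise ValueError(f"expected a single float argument, got {type_tags!r}")
    (value,) = struct.unpack_from(">f", packet, offset)  # OSC floats are big-endian
    return address, value


# Hypothetical mapping: OSC address -> (MIDI note, word shown on screen).
PAD_MAP = {
    "/drums/kick": (36, "thud"),
    "/drums/snare": (38, "crack"),
}


def osc_to_note(packet: bytes, channel: int = 0) -> Tuple[bytes, str]:
    """Translate a pad message into a raw MIDI note message plus its display word."""
    address, value = parse_osc_float_message(packet)
    note, word = PAD_MAP[address]
    velocity = max(0, min(127, round(value * 127)))
    # A value of 0 (pad released) becomes Note Off; anything else is Note On.
    status = (0x90 if velocity > 0 else 0x80) | (channel & 0x0F)
    return bytes([status, note, velocity]), word
```

Keeping the note number and the word in one table means the sound and its on-screen description cannot drift apart when pads are remapped.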